Attention Mechanism and Transformers

Overview

You will learn the basics of the attention mechanism and Transformers, and implement a Vision Transformer.

Goals

Learn about Attention Mechanism and Transformers

Why Attention and not Convolutions?

Patch Embeddings (Conversion of Images to “sequences”)

Understanding CLS tokens and Positional Embeddings

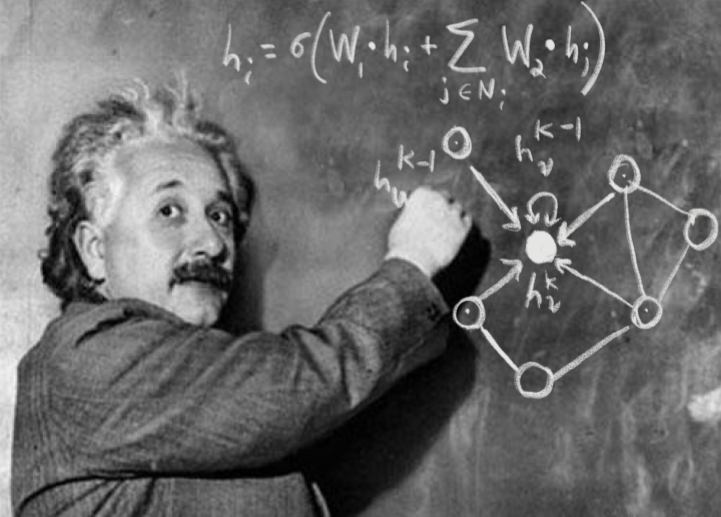

How to build a single Attention Head (Q, K, V)

Expanding Single-Head Attention to Multi-Head Attention

The purpose of Layer Normalization
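As a preview of how the pieces listed above fit together, here is a minimal NumPy sketch. It is not the lecture's implementation. The image size, patch size, `d_model`, `d_head`, and all weight matrices are hypothetical, and the weights are randomly initialized rather than learned. The layer normalization omits the learnable scale and shift that a full implementation would normally include.

```python
import numpy as np

rng = np.random.default_rng(0)

def patchify(image, patch):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    rows, cols = h // patch, w // patch
    x = image[: rows * patch, : cols * patch].reshape(rows, patch, cols, patch, c)
    # (rows, cols, patch, patch, c) -> (rows * cols, patch * patch * c)
    return x.transpose(0, 2, 1, 3, 4).reshape(rows * cols, patch * patch * c)

def layer_norm(x, eps=1e-5):
    """Normalize each token vector to zero mean and unit variance."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def softmax(x):
    """Numerically stable softmax over the last axis."""
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attention_head(x, w_q, w_k, w_v):
    """Single scaled dot-product attention head over a (tokens, d_model) input."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (tokens, tokens)
    weights = softmax(scores)                # each row sums to 1
    return weights @ v, weights

# Hypothetical sizes chosen only for illustration.
image = rng.normal(size=(32, 32, 3))
patch, d_model, d_head = 8, 64, 16

# Patch embedding: image -> sequence of 16 patch vectors -> d_model-sized tokens.
patches = patchify(image, patch)
w_embed = rng.normal(scale=0.02, size=(patches.shape[1], d_model))
tokens = patches @ w_embed

# Prepend a CLS token, then add positional embeddings (learned in practice).
cls_token = np.zeros((1, d_model))
tokens = np.concatenate([cls_token, tokens], axis=0)
pos_embed = rng.normal(scale=0.02, size=tokens.shape)
tokens = tokens + pos_embed

# One attention head applied to layer-normalized tokens.
w_q, w_k, w_v = (rng.normal(scale=0.02, size=(d_model, d_head)) for _ in range(3))
out, weights = attention_head(layer_norm(tokens), w_q, w_k, w_v)
print(out.shape, weights.shape)  # (17, 16) (17, 17)
```

Multi-head attention runs several such heads in parallel, each with its own `w_q`, `w_k`, and `w_v`, then concatenates their outputs and projects the result back to `d_model`.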

Lecture Materials

Homework Assignment

None

Supplemental Readings

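Neural Machine Translation by Jointly Learning to Align and Translate (Dzmitry Bahdanau, Kyunghyun Cho, Yoshua Bengio, 2014) - The paper that introduced an attention mechanism for neural machine translation.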

Transformer: A Novel Neural Network Architecture for Language Understanding (Jakob Uszkoreit, 2017) - The original Google blog post about the Transformer paper, focusing on the application in machine translation.