Deep Learning

%matplotlib inline
import matplotlib.pyplot as plt
import seaborn as sns; sns.set_theme()
import numpy as np
import pandas as pd
import os.path
import subprocess
import matplotlib.collections
import scipy.signal
from sklearn import model_selection
import warnings
warnings.filterwarnings('ignore')
from math import ceil
from sklearn.metrics import (
    accuracy_score, precision_score, recall_score,
    roc_auc_score, average_precision_score
)
import torch
from torch import nn
from torch.utils.data import TensorDataset, DataLoader

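def wget_data(url: str):
    """Download url into ./tmp_data with wget, skipping files that already exist (-nc)."""
    local_path = './tmp_data'
    p = subprocess.Popen(["wget", "-nc", "-P", local_path, url],
                         stderr=subprocess.PIPE, encoding='UTF-8')
    # wget reports its progress on stderr; echo it line by line until the pipe closes.
    for line in p.stderr:
        line = line.rstrip('\n')
        if len(line) > 0:
            print(line)
    p.wait()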

def locate_data(name, check_exists=True):
    """Return the local path of a downloaded data file, optionally checking that it exists."""
    local_path = './tmp_data'
    path = os.path.join(local_path, name)
    if check_exists and not os.path.exists(path):
        raise RuntimeError('No such data file: {}'.format(path))
    return path
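`wget_data` assumes the `wget` command-line tool is installed, which is not the default on every system (for example macOS or Windows). As a minimal sketch, here is a standard-library alternative. The name `fetch_data` is hypothetical (it is not part of the course code), and it only imitates the two `wget` options used above: `-P` (save into a directory) and `-nc` (never overwrite an existing file).

```python
import os
import urllib.request
from urllib.parse import urlparse


def fetch_data(url: str, local_path: str = "./tmp_data") -> str:
    """Download `url` into `local_path` unless a file of that name is already there.

    Hypothetical helper: like `wget -nc -P local_path url`, the local file name is
    the last component of the URL path, and an existing file is never overwritten.
    """
    os.makedirs(local_path, exist_ok=True)
    name = os.path.basename(urlparse(url).path)
    path = os.path.join(local_path, name)
    if os.path.exists(path):
        print(f"File '{path}' already there; not retrieving.")
        return path
    # urlretrieve writes the response body straight to `path`.
    urllib.request.urlretrieve(url, path)
    return path
```

For example, `fetch_data('https://raw.githubusercontent.com/illinois-mlp/MachineLearningForPhysics/main/data/circles_data.hf5')` would save the file as `./tmp_data/circles_data.hf5`, which is the path `locate_data('circles_data.hf5')` expects.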

Get Data

wget_data('https://raw.githubusercontent.com/illinois-mlp/MachineLearningForPhysics/main/data/circles_data.hf5')

wget_data('https://raw.githubusercontent.com/illinois-mlp/MachineLearningForPhysics/main/data/circles_targets.hf5')

wget_data('https://raw.githubusercontent.com/illinois-mlp/MachineLearningForPhysics/main/data/spectra_data.hf5')

File ‘./tmp_data/circles_data.hf5’ already there; not retrieving.

File ‘./tmp_data/circles_targets.hf5’ already there; not retrieving.

File ‘./tmp_data/spectra_data.hf5’ already there; not retrieving.

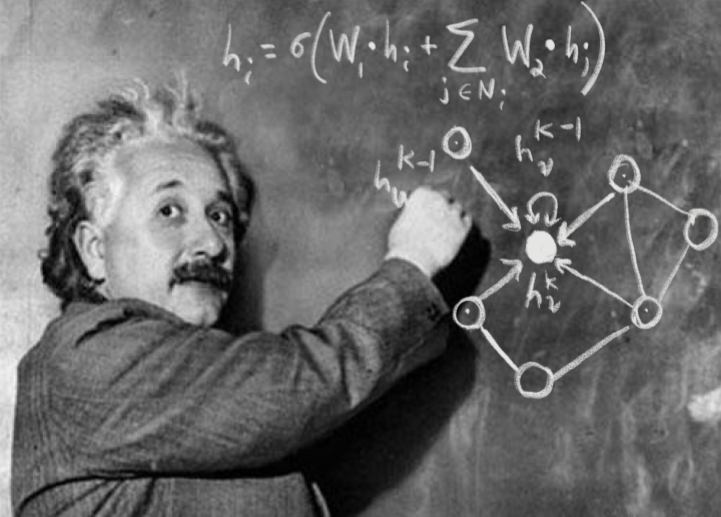

Neural Network Architectures for Deep Learning

We previously looked at the basic building blocks of a neural network. Here we put them together to train and evaluate a simple neural network with a single hidden layer; applying the same recipe to networks with many more hidden layers is what we call deep learning.

Later, we will look at two other architectures that are currently driving the deep-learning revolution:

- Convolutional networks
- Recurrent networks

We conclude with some reflections on where “deep learning” is headed.

We learned about TensorFlow and PyTorch last time. Here, we will focus on PyTorch, which has become the more common toolbox for deep learning.

Reading Data

First, we read the circles data and split it into training (400 samples) and test (100 samples) sets.

X = pd.read_hdf(locate_data('circles_data.hf5'))
y = pd.read_hdf(locate_data('circles_targets.hf5'))
X_train, X_test, y_train, y_test = model_selection.train_test_split(
    X, y, test_size=100, random_state=123)
# Convert to NumPy float32
Xtr = X_train.astype("float32").to_numpy()
ytr = y_train.squeeze().astype("float32").to_numpy()
Xte = X_test.astype("float32").to_numpy()
yte = y_test.squeeze().astype("float32").to_numpy()

Check the array types and shapes:

print(type(Xtr), Xtr.shape, Xtr.dtype)

print(type(ytr), ytr.shape, ytr.dtype)

<class 'numpy.ndarray'> (400, 2) float32

<class 'numpy.ndarray'> (400,) float32
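With an integer `test_size`, `train_test_split` holds out that many samples rather than a fraction of the data, which is how 500 samples become 400 for training and 100 for testing. The sketch below shows the idea with plain NumPy index shuffling. `split_indices` is a hypothetical helper, and it will not reproduce scikit-learn's exact split for the same seed.

```python
import numpy as np


def split_indices(n_samples: int, test_size: int, seed: int = 123):
    """Return (train_idx, test_idx) for a random split with an absolute test size.

    Hypothetical sketch of the idea behind train_test_split with an integer
    test_size; the permutation differs from scikit-learn's.
    """
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n_samples)  # shuffle all sample indices once
    return perm[test_size:], perm[:test_size]


train_idx, test_idx = split_indices(500, test_size=100)
print(len(train_idx), len(test_idx))  # 400 100
```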

Next, we convert the arrays to PyTorch tensors and wrap the training set in a DataLoader that serves shuffled mini-batches of 50 samples.

device = torch.device("cpu") # or "cuda" if available and desired

Xtr_t = torch.from_numpy(Xtr)

ytr_t = torch.from_numpy(ytr)

Xte_t = torch.from_numpy(Xte)

yte_t = torch.from_numpy(yte)

batch_size = 50

train_ds = TensorDataset(Xtr_t, ytr_t)

g = torch.Generator().manual_seed(123)

train_loader = DataLoader(train_ds, batch_size=batch_size, shuffle=True, generator=g)
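Conceptually, `DataLoader(..., shuffle=True)` draws a fresh permutation of the 400 training indices at the start of each epoch and slices it into batches of 50, so one epoch is 8 parameter updates. A pure-Python sketch of that behavior, assuming the hypothetical name `iterate_minibatches`, with `random.Random` standing in for the seeded `torch.Generator`:

```python
import random


def iterate_minibatches(n_samples: int, batch_size: int, rng: random.Random):
    """Yield one epoch of shuffled index batches (the last batch may be smaller)."""
    order = list(range(n_samples))
    rng.shuffle(order)  # a new permutation each epoch, drawn from the shared rng
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]


rng = random.Random(123)  # created once, like the seeded generator g
epoch1 = list(iterate_minibatches(400, 50, rng))
epoch2 = list(iterate_minibatches(400, 50, rng))
print(len(epoch1), [len(b) for b in epoch1])
```

Because the generator is created once and shared, the two epochs visit every sample exactly once but in different orders, while the whole run stays reproducible.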

Define the Model

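Let’s define a simple model: a fully connected network with D = 2 inputs, one hidden layer of 4 sigmoid units, and a single sigmoid output that gives the predicted probability of class 1.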

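D = Xtr.shape[1]  # number of input features
model = nn.Sequential(
    nn.Linear(D, 4),   # input -> 4 hidden units
    nn.Sigmoid(),
    nn.Linear(4, 1),   # hidden -> 1 output
    nn.Sigmoid(),      # output in (0, 1): probability of class 1
).to(device)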
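The model computes p = sigmoid(W2 · sigmoid(W1 x + b1) + b2). Below is a NumPy sketch of the same forward pass, written for an arbitrary list of layer widths so that a deeper network is just a longer list. The helper names are hypothetical, and the uniform initialization range is an assumption chosen to roughly mirror PyTorch's default for `nn.Linear`; weight shapes follow `nn.Linear`'s (out_features, in_features) convention.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_mlp(sizes, seed=0):
    """Random (W, b) pairs for a fully connected net with layer widths `sizes`."""
    rng = np.random.default_rng(seed)
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        bound = 1.0 / np.sqrt(n_in)  # assumed init range, for illustration only
        W = rng.uniform(-bound, bound, size=(n_out, n_in))
        b = rng.uniform(-bound, bound, size=n_out)
        params.append((W, b))
    return params


def forward(params, X):
    """Apply Linear followed by Sigmoid for every layer, like the nn.Sequential model."""
    h = X
    for W, b in params:
        h = sigmoid(h @ W.T + b)
    return h


def n_params(params):
    return sum(W.size + b.size for W, b in params)


X = np.array([[0.0, 0.0], [1.0, -1.0], [0.5, 2.0]])
shallow = init_mlp([2, 4, 1])    # same shape as the model above
deep = init_mlp([2, 16, 16, 1])  # a hypothetical deeper variant
print(forward(shallow, X).shape, n_params(shallow))  # (3, 1) 17
print(forward(deep, X).shape, n_params(deep))        # (3, 1) 337
```

The 2-4-1 network has 17 trainable parameters (4×2 weights + 4 biases in the first layer, 1×4 weights + 1 bias in the second), the same count PyTorch reports for `sum(p.numel() for p in model.parameters())`.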

Set the Optimizer

Next, we define the optimizer. Here we use Adagrad, a form of gradient descent in which each parameter's step size shrinks according to the sum of its past squared gradients (alternatively, we could use Adam as before). For the loss function, we use binary cross-entropy (BCE).
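Written out, the mean binary cross-entropy that `nn.BCELoss(reduction="mean")` computes over a mini-batch of B samples, with labels y_i ∈ {0, 1} and predicted probabilities p_i, is

$$
\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{B}\sum_{i=1}^{B}\Big[\,y_i \log p_i + (1 - y_i)\log(1 - p_i)\,\Big]
$$

For example, predicting p_i = 0.5 for every sample gives a loss of ln 2 ≈ 0.693 whatever the labels are, so a trained classifier should do better than that.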

optimizer = torch.optim.Adagrad(
    model.parameters(),
    lr=0.05,
    initial_accumulator_value=0.1,
    eps=1e-10,
)
criterion = nn.BCELoss(reduction="mean")  # average loss per batch
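Adagrad keeps a running sum of squared gradients for each parameter and divides the learning rate by its square root, so parameters that have seen large gradients take smaller steps. Below is a scalar sketch of that update in the form documented for `torch.optim.Adagrad` with no learning-rate decay and no weight decay. `adagrad_minimize` and the toy quadratic objective are illustrative assumptions.

```python
import math


def adagrad_minimize(grad, theta0, lr=0.05, initial_accumulator_value=0.1,
                     eps=1e-10, steps=500):
    """Minimize a 1-D function with Adagrad, given its gradient function `grad`."""
    theta = theta0
    accum = initial_accumulator_value  # running sum of squared gradients
    for _ in range(steps):
        g = grad(theta)
        accum += g * g
        # The effective step size lr / sqrt(accum) shrinks as gradients accumulate.
        theta -= lr * g / (math.sqrt(accum) + eps)
    return theta


# Toy objective (an assumption for illustration): f(theta) = (theta - 3)^2,
# whose gradient is 2 * (theta - 3). A larger lr than the notebook's 0.05
# lets it converge within the default 500 steps.
theta = adagrad_minimize(lambda t: 2.0 * (t - 3.0), theta0=0.0, lr=0.5)
print(theta)
```

Since the accumulator always exceeds the current squared gradient, each step is smaller than `lr` in magnitude; with `lr=0.05` the same toy problem still moves toward 3 but much more slowly, which is why the example passes a larger rate.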

Train the model

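Now we train the model for a fixed number of parameter updates (5000 mini-batch steps) rather than a fixed number of epochs.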

torch.manual_seed(123)
np.random.seed(123)
# Train for 5000 updates
steps_per_epoch = ceil(len(Xtr) / batch_size)
target_steps = 5000
epochs = ceil(target_steps / steps_per_epoch)
model.train()
global_step = 0
for epoch in range(epochs):
    for xb, yb in train_loader:
        xb = xb.to(device)
        yb = yb.to(device).view(-1, 1)
        # forward propagation
        probs = model(xb)  # (B,1) after final Sigmoid
        loss = criterion(probs, yb)
        # backward propagation
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        global_step += 1
        if global_step >= target_steps:
            break
    if global_step >= target_steps:
        break
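The loop above counts parameter updates rather than epochs. The arithmetic below, wrapped in a hypothetical helper `schedule`, shows what that means here: with 400 training samples and batches of 50, one epoch is 8 updates, so 5000 updates take exactly 625 epochs and every epoch runs to completion. With a batch size that does not divide the numbers evenly (64 is used below purely as an example), the early `break` cuts the final epoch short.

```python
from math import ceil


def schedule(n_train, batch_size, target_steps):
    """Return (steps_per_epoch, epochs, batches skipped in the final epoch)."""
    steps_per_epoch = ceil(n_train / batch_size)  # the last batch may be partial
    epochs = ceil(target_steps / steps_per_epoch)
    skipped = epochs * steps_per_epoch - target_steps
    return steps_per_epoch, epochs, skipped


print(schedule(400, 50, 5000))  # (8, 625, 0)
print(schedule(400, 64, 5000))  # (7, 715, 5)
```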

Performance Evaluation

Let’s look at some performance metrics evaluated on the test dataset:

model.eval()
with torch.no_grad():
    probs_te = model(Xte_t.to(device)).cpu().numpy().squeeze()  # (N_test,)
# Thresholded predictions at 0.5
preds_te = (probs_te >= 0.5).astype(np.float32)
# Core metrics
acc = accuracy_score(yte, preds_te)
prec = precision_score(yte, preds_te, zero_division=0)
rec = recall_score(yte, preds_te, zero_division=0)
# AUCs (need both classes present; handle edge cases)
try:
    auc_roc = roc_auc_score(yte, probs_te)
except ValueError:
    auc_roc = float("nan")
try:
    auc_pr = average_precision_score(yte, probs_te)
except ValueError:
    auc_pr = float("nan")
# Binary cross-entropy on test set (mean and sum)
eps = 1e-7
p = np.clip(probs_te, eps, 1 - eps)
avg_loss = float(-np.mean(yte * np.log(p) + (1 - yte) * np.log(1 - p)))
sum_loss = float(avg_loss * len(yte))
label_mean = float(np.mean(yte))
pred_mean = float(np.mean(probs_te))
acc_base = float(max(label_mean, 1.0 - label_mean))
metrics = {
    "accuracy": float(acc),
    "accuracy_baseline": acc_base,
    "auc": float(auc_roc),
    "auc_precision_recall": float(auc_pr),
    "average_loss": avg_loss,
    "label/mean": label_mean,
    "loss": sum_loss,
    "precision": float(prec),
    "prediction/mean": pred_mean,
    "recall": float(rec),
    "global_step": int(global_step),
}
print(metrics)

{'accuracy': 1.0, 'accuracy_baseline': 0.5299999713897705, 'auc': 1.0, 'auc_precision_recall': 1.0, 'average_loss': 0.31887248158454895, 'label/mean': 0.5299999713897705, 'loss': 31.887248158454895, 'precision': 1.0, 'prediction/mean': 0.5392262935638428, 'recall': 1.0, 'global_step': 5000}
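Most of these numbers follow from the confusion-matrix counts, and `accuracy_baseline` is the accuracy of always predicting the majority class (here 0.53, the fraction of positive test labels). The pure-Python sketch below recomputes them on a small made-up example, plus ROC AUC in its rank form: the fraction of (positive, negative) pairs in which the positive sample gets the higher score, with ties counted as one half. The function names and toy inputs are illustrative; scikit-learn computes AUC from the ROC curve, which gives the same value for binary labels.

```python
def binary_metrics(y_true, probs, threshold=0.5):
    """Accuracy, precision, recall and the majority-class baseline, from counts."""
    preds = [1 if p >= threshold else 0 for p in probs]
    pairs = list(zip(y_true, preds))
    tp = sum(1 for y, q in pairs if y == 1 and q == 1)
    tn = sum(1 for y, q in pairs if y == 0 and q == 0)
    fp = sum(1 for y, q in pairs if y == 0 and q == 1)
    fn = sum(1 for y, q in pairs if y == 1 and q == 0)
    n = len(y_true)
    label_mean = sum(y_true) / n
    return {
        "accuracy": (tp + tn) / n,
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # like zero_division=0
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "accuracy_baseline": max(label_mean, 1 - label_mean),
    }


def roc_auc_pairwise(y_true, scores):
    """ROC AUC as the fraction of correctly ranked (positive, negative) pairs."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        # Same situation the try/except around roc_auc_score guards against.
        raise ValueError("need both classes present")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


y = [1, 0, 1, 1, 0]
p = [0.9, 0.4, 0.35, 0.8, 0.6]
print(binary_metrics(y, p))
print(roc_auc_pairwise(y, p))
```

A perfect AUC of 1.0, as reported above, means every positive test sample received a higher predicted probability than every negative one.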

We can also look at the weights and biases in each layer:

first_linear = model[0]  # nn.Linear(D,4)
W1 = first_linear.weight.detach().cpu().numpy()  # shape (4, D)
b1 = first_linear.bias.detach().cpu().numpy()  # shape (4,)
second_linear = model[2]  # nn.Linear(4,1)
W2 = second_linear.weight.detach().cpu().numpy()  # shape (1, 4)
b2 = second_linear.bias.detach().cpu().numpy()  # shape (1,)
print("First layer W (shape {}):\n".format(W1.shape), W1)
print("First layer b (shape {}):\n".format(b1.shape), b1)
print("Second layer W (shape {}):\n".format(W2.shape), W2)
print("Second layer b (shape {}):\n".format(b2.shape), b2)
for j in range(W1.shape[0]):  # 4 hidden units
    print(f"\nHidden unit {j}:")
    for i, col in enumerate(X_train.columns):
        print(f" {col:20s} {W1[j, i]: .6f}")

First layer W (shape (4, 2)):

[[ 2.3064497 3.0436256]

[-1.8661021 2.0942287]

[ 2.8583496 1.2027841]

[-0.7847262 3.7772076]]

First layer b (shape (4,)):

[-3.4552228 -2.6118317 2.3626225 2.7051387]

Second layer W (shape (1, 4)):

[[-3.1733155 -1.9705482 1.1891489 2.1748834]]

Second layer b (shape (1,)):

[-1.3059076]

Hidden unit 0:

x0 2.306450

x1 3.043626

Hidden unit 1:

x0 -1.866102

x1 2.094229

Hidden unit 2:

x0 2.858350

x1 1.202784

Hidden unit 3:

x0 -0.784726

x1 3.777208
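Each first-layer unit computes sigmoid(w·x + b), so in the (x0, x1) input plane its output crosses 0.5 along the straight line w·x + b = 0 and rises toward 1 on the side the weight vector points to. The sketch below computes those four lines from the weights printed above (values from this particular run; a rerun may give different numbers). The output layer then combines the four sigmoid "ramps" into the curved boundary that separates the circles. `unit_boundary` and the x0 range are illustrative assumptions.

```python
import numpy as np

# First-layer weights and biases copied from the printed output above (rows = hidden units).
W1 = np.array([[ 2.3064497,  3.0436256],
               [-1.8661021,  2.0942287],
               [ 2.8583496,  1.2027841],
               [-0.7847262,  3.7772076]])
b1 = np.array([-3.4552228, -2.6118317, 2.3626225, 2.7051387])


def unit_boundary(j, x0):
    """x1 on the line where hidden unit j's pre-activation W1[j] @ x + b1[j] is zero."""
    w0, w1 = W1[j]
    return -(w0 * x0 + b1[j]) / w1  # all w1 entries above are nonzero


x0 = np.linspace(-1.5, 1.5, 5)  # assumed plotting range, for illustration
for j in range(4):
    print(f"unit {j}: x1 =", np.round(unit_boundary(j, x0), 3))
```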

Deep Learning Outlook

The depth of “deep learning” comes primarily from network architectures that stack many layers. In another sense, deep learning is very shallow: it often performs well with generic building blocks and little to no specific knowledge about the problem it is solving.

The field of modern deep learning started around 2012, when the architectures mentioned above were first used successfully and the necessary large-scale computing and datasets became available. Massive neural networks are now the state of the art for many benchmark problems, including image classification, speech recognition, and language translation.

However, even within the field's first decade, there were signs that deep learning was reaching its limits. Some of its pioneers, among others, have taken a critical look at the current state of the field:

- Deep learning does not use data efficiently.
- Deep learning does not integrate prior knowledge.
- Deep learning often gives correct answers but without associated uncertainties.
- Deep learning applications are hard to interpret and transfer to related problems.
- Deep learning is excellent at learning stable input-output mappings but does not cope well with varying conditions.
- Deep learning cannot distinguish between correlation and causation.

These are mostly concerns for the future of neural networks as a general model for artificial intelligence, but they also limit the potential of scientific applications.

However, there are many challenges in scientific data analysis and interpretation that could benefit from deep learning approaches, so I encourage you to follow the field and experiment. Through this course, you now have a pretty solid foundation in data science and machine learning to further your studies toward more advanced and current topics!

Acknowledgments

Initial version: Mark Neubauer

Updates: Aaron Pearlman

© Copyright 2026