Precision Neutron Counting with Tail Pulse Pileup Rejection#

Overview#

From: Fornal, B. Neutron Dark Decay. Universe 2023, 9, 449. https://doi.org/10.3390/universe9100449

Introduction to the physics#

Free neutrons will spontaneously undergo beta decay into a proton, electron, and electron anti-neutrino. The most precise measurements of the neutron lifetime are currently held by two different experimental methods shortened to beam and bottle measurements. The beam measurement is done by sending a beam of thermal neutrons through a penning trap and counting the exact number of decayed protons left in the trap and the number of neutrons that leave the trap. By knowing the time of flight, one can back out the neutron lifetime. The bottle measurement is done by collecting ultra cold neutrons in a magneto-gravitational trap, waiting a specified time, then counting the remaining neutrons with a detector. These two measurement techniques have produced lifetimes which disagree by about ten seconds translating to a 4.3 sigma discrepancy. This large difference constitutes the neutron lifetime puzzle which the next generation upgrades to the beam and bottle method are looking to solve. Pictured below is the beam method studied in this project.

From BL3 NSF presentation

The bottle method has managed to get 10^-1 second scale accuracy in their measurement while the current generation beam measurement has second scale accuracy. The next generation beam experiment, BL3, aims to reduce this uncertainty to better than 0.4 second accuracy. To achieve this, an improved calibration system for counting the neutrons coming out of the trap is being developed at Illinois called the Alpha-Gamma 2.0 system. By counting alpha particles and gamma rays produced in the capture of neutrons from the beam incident on a boron or lithium foil target, one can deduce a calibration scheme for the neutron counters.

The alphas and gammas are counted by planar silicon detectors and germanium detectors respectively. These semiconductor detectors create electron and electron hole pairs with total energy proportional to the incident radiation. The sum of these charges creates a delta function pulse that is fed into a preamplifier to bring the signal up to the hundreds of mV range. However, the preamplifier changes the shape of this pulse from a delta function into a tail pulse which has a sharp rise (nanosecond scale) on the left and an exponential decay (microsecond scale) on the right. Finally, the tail pulses are fed into a digitizer which converts the analog signal into a digital trapezoidal shape which is easier to use in computer processing and reduces noise.

We know what energy to expect from the alphas and gammas in the experiment, so the challenge is to precisely count the number of events in each detector in the specified energy range. One issue is that if two particles deposit their energy within a short time, the resulting tail pulses and trapezoids stack up on one another (pile-up) making the resulting shape difficult to analyze. The focus of this project will be on detecting and resolving the energies of these piled up trapezoids for the precise counting of neutron captures using data from test radiation sources such as cobalt-60.

Questions 1 & 2: Understanding BL3#

Question 1 - Why do we care about the neutron lifetime?#

From this brief article or from any other articles you might dig up (good searches are usually: “neutron lifetime”, “neutron lifetime puzzle”, etc.), summarize in your own words what other physics depends on the free neutron lifetime. What other fields or researchers might be interested in this quantity? Link your own sources below.

Question 2 - Why is energy resolution important?#

For both the alpha and gamma detectors, we take a specific cut of the energy spectrum and only count events in this range since our event energies are monoenergetic (478 keV gammas and 2.31 MeV alphas). Assuming these detectors are inside a stainless steel vacuum chamber, which detector will experience more background events? Reference either the wikipedia article on radiation or another source of your choice. What physics signal from this background might bleed into our narrow energy range? (Hint: This effect is common to all spectra of this type. Perhaps look online for features of radioactive spectra.) This is a precision measurement experiment so it’s important to minimize background and understand the origin of it.

After going through some of the introduction to pile-up, how might the detected events we are interested in leak out of the energy cut if uncorrected? This happens in both detectors unlike the background.

Introduction to trapezoidal data#

To extract precise lifetime from the BL3 experiment, we need to extract multiple particle decay rates with precision better than 0.01%. However, real signals may be distorted by noise and pile-up events, which hinder its ability to accurately resolve pulses. Therefore, instead of extracting directly off the peak height, a trapezoidal filter is incredibly useful in this purpose.

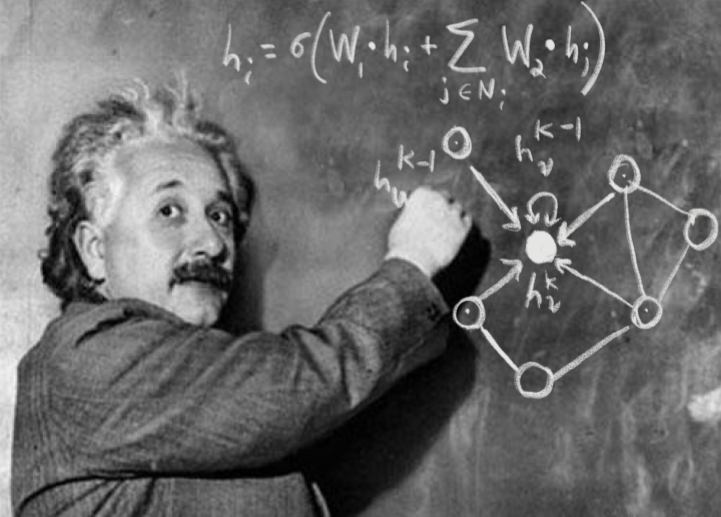

The trapezoidal filter is a widely used digital signal processing technique in nuclear and particle physics experiments, particularly for shaping and analyzing signals from charge-sensitive preamplifiers. Its main purpose is to extract energy information from pulses while minimizing noise and preserving timing resolution. The filter can be modeled as follows:

From Ref [1], Equations (28) and (29)

Where \( s(n) \) is the system response, \(v(n)\) is the sample of the signal at time \(n\), \(l\) is the length of the convolution, and \(k\) is a second length parameter [1].

The filter’s output resembles a trapezoid, characterized by a linear rise, a flat top, and a linear fall. Key parameters influencing its performance include the peaking time (or rise time), which determines how quickly the signal reaches the flat top and affects noise suppression and resolution; the gap time, which defines the width of the flat top and allows for ballistic deficit correction; and the decay time correction, or energy correction, which compensates for exponential decay in the preamplifier output, ensuring accurate amplitude measurement. Together, these parameters must be carefully tuned to balance energy resolution, noise rejection, and signal pile-up recovery based on the specific characteristics of the detector and electronics.

While the trapezoidal filter is effective at shaping pulses and improving energy resolution, it does not inherently resolve pulse pile-up, a common issue in high-rate environments where two or more events occur within a short time window. When pile-up occurs, the resulting waveform becomes a superposition of overlapping pulses, which the trapezoidal filter may interpret as a single distorted pulse, leading to inaccurate timing and energy estimation. To address this limitation, machine learning (ML) models, particularly recurrent neural networks (RNNs) and convolutional neural networks (CNNs), offer powerful alternatives. CNNs excel at recognizing local patterns in waveforms, such as distinguishing overlapping peaks, while RNNs can capture temporal dependencies to model the sequence of pulses over time. By training these models on labeled waveform data, they can learn to accurately identify the number of peaks, estimate each pulse’s timing, and recover the true energy of individual events—even in complex pile-up scenarios. This approach can significantly enhance signal processing performance beyond the capabilities of traditional trapezoidal filtering.

Shown below is the effect of pile-up where the left pulse is alone, but the right pulse is piled up with a secondary pulse spaced close together.

From: Yang et al. Efficient pile-up correction based on pulse-tail prediction for high count rates, 2022, https://doi.org/10.1016/j.nima.2022.166376

Part 2: Understanding the Trapzeoidal Filter#

Question 3 - Advantage of trapezoids#

Briefly explain in your own words what a trapzeoidal filter is. Take a guess at the advantage of trapezoidal filters for resolving the energy spectrum of a detector compared to using only the peak height. (Hint: These filters are designed for real detectors that aim to make precision measurements using analog real-world components. What real-world effects would complicate this process?)

Question 4 - Parameter estimation#

Shown below is a simulated pulse before convolution in blue and the resulting trapezoid produced from the convolution in the equation above.

From this image, approximate the energy, peaking time, and gap time of the trapezoidal filter. Give a brief description of each parameter.

Part 3: Pre-ML Approach to Data Analysis#

One way to analyze the tail-pulse data a without trapezoidal filter and without machine learning is through pulse fitting. We can use the gradient of the tail-pulse to “guess” the number of pulses, and perform a curve fit on the sum of overlapping asymmetric pulses. Here, each pulse is described by a function characterized by a rise time (tr), a decay time (td), an arrival time (x0), and an amplitude (A), where td and tr are shared among all pulses within a fit.

With successful fitting, we can then histogram the amplitudes to obtain the energy distribution. However, there are a couple of challenges:

Pulse model selection is important as it influences the accuracies and the resolutions of the fitting outcomes.

Pulse detection remains a challenge especially with pile-ups, as the convoluted gradient hides the information on the number of pulses existing.

Before dealing with those challenges, let’s try implementing this algorithm. We use this pulse model:

Below is how analysis is done on a single pulse from actual data acquired. We have to correct the baseline due to measurement setting.

In order to improve the accuracy to estimate the number of pulses with noise, we use a low-pass filter applied onto the waveform and stored it in data_grad. We use this filtered waveform to extract gradient while performing the fitting on the original waveform (passed thorugh 1 Gaussian filter).

Plot the waveform, its gradient, and print the energy value.

(Note: for this part, we use the actual waveform obtained in experiment)

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

import glob

from scipy.optimize import curve_fit, least_squares

from scipy.signal import savgol_filter, find_peaks

from scipy.ndimage import gaussian_filter1d

from tqdm.notebook import tqdm

plt.style.use('seaborn-v0_8')

# Import the data

import gdown

file_id = '1OF9F9_JQ4YygE3FcPWBtNBSLpl1XxuFg'

file_name = 'trial_9.npy'

gdown.download(f'https://drive.google.com/uc?id={file_id}', file_name, quiet=False)

data = np.load(file_name)

data = data[:10000] # We take first 10000 data for faster runtime

data_grad = savgol_filter(data, window_length=50, polyorder=3, axis=1)

data = gaussian_filter1d(data, sigma=1.8, axis=1)

def pulse(x, x0, td, tr, A): # single pulse

y = np.zeros_like(x)

y = A * np.exp(-(x-x0) / td) / (1 + np.exp(-(x-x0) / tr))

return y

def detect_pulses_grad_fast(waveform, grad_threshold=1.7, window=10):

grad = np.gradient(waveform)

kernel = np.ones(window)

conv = np.convolve(grad > grad_threshold, kernel, mode='same')

peaks, _ = find_peaks(conv, height=window)

return peaks

def multi_pulse_shared_tr_td(x, *params):

td = params[0]

tr = params[1]

N = (len(params) - 2) // 2

x0s = params[2:2 + N]

As = params[2 + N:]

y = np.zeros_like(x)

for x0, A in zip(x0s, As):

y += A * np.exp(-(x-x0) / td) / (1 + np.exp(-(x-x0) / tr))

return y

def fit_waveform_multi(waveform, smooth_grad, index=None):

x = np.arange(len(waveform), dtype=float)

residual = waveform.copy()

baseline = int(np.mean(residual[:200]))

residual = waveform - baseline

peak_indices = detect_pulses_grad_fast(smooth_grad)

delta_x0 = 50

filtered = [(idx, residual[idx] - residual[max(0, idx - delta_x0)]) for idx in peak_indices]

filtered = [(idx, A0) for idx, A0 in filtered if A0 > 0]

if len(filtered) == 0:

return [], [], residual, -100000

peak_indices, A0s = zip(*filtered)

peak_indices = list(peak_indices)

A0s = list(A0s)

p0 = [11508, 15] + list(peak_indices) + list(A0s)

N = len(peak_indices)

x0_lowers = [max(0, idx - delta_x0) for idx in peak_indices]

x0_uppers = [min(len(waveform), idx + delta_x0) for idx in peak_indices]

bounds_lower = [1e3, 1] + x0_lowers + [0] * N

bounds_upper = [1e5, 200] + x0_uppers + [5e3] * N

try:

popt, _ = curve_fit(multi_pulse_shared_tr_td, x, residual,

p0=p0, maxfev=5000, bounds=(bounds_lower, bounds_upper))

except Exception as e:

print("Fitting failed:", e)

return [], [], residual, -100000

fitted_waveform = multi_pulse_shared_tr_td(x, *popt)

residual = residual - fitted_waveform

td, tr = popt[0], popt[1]

x0s, As = popt[2:2+N], popt[2+N:]

pulses = [(x0, td, tr, A) for x0, A in zip(x0s, As)]

return pulses, popt, residual, baseline

index = 0

pulses, popt, residual, baseline = fit_waveform_multi(data[index], data_grad[index])

print(pulses)

print(popt)

print(residual)

print(baseline)

# Plot out the waveform, its gradient, and print the energy

Now, the code below can find the energy distribution from looping over all the waveforms. Because the gradient depends on the energy, some of the events on the lower energy side of the spectrum would fail to fit. Ignore those.

# Gamma

def find_energy():

all_pulses = []

all_tds = []

all_trs = []

all_energies = []

for i, (raw, grad) in tqdm(enumerate(zip(data, data_grad)), total=len(data)):

pulses, popt, residual, baseline = fit_waveform_multi(raw, grad, i)

if baseline == -100000:

continue

all_pulses.append(pulses)

if popt is not None and len(popt) >= 2:

all_tds.append(popt[0])

all_trs.append(popt[1])

for p in pulses:

all_energies.append(p[-1]) # Assuming p[-1] is the amplitude

# Histogram 1: Full energy range

plt.figure(figsize=(6, 4))

energy_bins = np.arange(min(all_energies), max(all_energies))

energy_hist, energy_edges = np.histogram(all_energies, bins=energy_bins)

plt.hist(all_energies, bins=energy_bins, edgecolor='k')

plt.xlabel('Pulse Amplitude (Energy)')

plt.ylabel('Counts')

plt.title('Energy Spectrum')

plt.yscale('log')

plt.tight_layout()

plt.show()

# Histogram 2: Zoomed to mean (-1.5 std, +5 std)

plt.figure(figsize=(6, 4))

zoom_bins = np.arange(int(np.mean(all_energies) - 1.5*np.std(all_energies)),

int(np.mean(all_energies) + 5*np.std(all_energies)))

zoom_hist, zoom_edges = np.histogram(all_energies, bins=zoom_bins)

plt.hist(all_energies, bins=zoom_bins, edgecolor='k')

plt.xlabel('Pulse Amplitude (Energy)')

plt.ylabel('Counts')

plt.title('Zoomed Energy Spectrum')

plt.yscale('log')

plt.tight_layout()

plt.show()

# Histogram: td

plt.figure(figsize=(6, 4))

td_bins = np.linspace(min(all_tds), max(all_tds), 50)

td_hist, td_edges = np.histogram(all_tds, bins=td_bins)

plt.hist(all_tds, bins=td_bins, edgecolor='k')

plt.xlabel('Decay Time (td)')

plt.ylabel('Counts')

plt.title('Distribution of td')

plt.yscale('log')

plt.tight_layout()

plt.show()

# Histogram: tr

plt.figure(figsize=(6, 4))

tr_bins = np.linspace(min(all_trs), max(all_trs), 50)

tr_hist, tr_edges = np.histogram(all_trs, bins=tr_bins)

plt.hist(all_trs, bins=tr_bins, edgecolor='k')

plt.xlabel('Rise Time (tr)')

plt.ylabel('Counts')

plt.title('Distribution of tr')

plt.yscale('log')

plt.tight_layout()

plt.show()

find_energy()

Now, try changing the fitting pulse shape to:

and find the energy distribution.

def pulse(x, x0, td, tr, A):

y = np.zeros_like(x)

# Implement this

raise NotImplementedError()

def multi_pulse_shared_tr_td(x, *params):

td = params[0]

tr = params[1]

N = (len(params) - 2) // 2

x0s = params[2:2 + N]

As = params[2 + N:]

y = np.zeros_like(x)

for x0, A in zip(x0s, As):

# Implement this

raise NotImplementedError()

index = 0

pulses, popt, residual, baseline = fit_waveform_multi(data[index], data_grad[index])

print(pulses)

print(popt)

print(residual)

print(baseline)

# Plot out the waveform, its gradient, and print the energy

find_energy()

Part 4: Understanding the Dataset by ML#

import numpy as np

import matplotlib.pyplot as plt

from sklearn.ensemble import RandomForestRegressor, RandomForestClassifier, GradientBoostingClassifier, GradientBoostingRegressor

from sklearn.metrics import mean_absolute_error, accuracy_score

import pandas as pd

import ast

from collections import Counter

from matplotlib.colors import LogNorm

from sklearn.multioutput import MultiOutputRegressor

plt.style.use('seaborn-v0_8')

For ML Implementation, we will break the challenge into two different parts: finding the number of pulses and finding the energies.

(Note: for this part, for simplicity, we will use the waveforms obtained from simulation)

Question 5 - An ML approach#

For this step, we will train a model to find the number of pulses from the signal.

To begin with, we can read the data in through:

https://drive.google.com/file/d/1_0vTiUCjNfjSs9GaBBn0pQFWk0DEkpn6/view?usp=sharing

# Import the data

import gdown

file_id = '1_0vTiUCjNfjSs9GaBBn0pQFWk0DEkpn6'

file_name = 'data_set2.parquet'

gdown.download(f'https://drive.google.com/uc?id={file_id}', file_name, quiet=False)

data = pd.read_parquet(file_name)

print(data.head())

for i in range(1, 10):

print(f"Events with {i} pulse(s): ", len(data[data['num_pulses'] == i]))

The dataframe is a little bit tricky to deal with. We will provide the code to separate it into training set and testing set here:

train_data = pd.DataFrame()

test_data = pd.DataFrame()

for i in range(1, 7):

train_data = pd.concat([

train_data,

data[data['num_pulses'] == i].iloc[0:int(len(data[data['num_pulses'] == i])*0.8)]

])

test_data = pd.concat([

test_data,

data[data['num_pulses'] == i].iloc[int(len(data[data['num_pulses'] == i])*0.8):]

])

X_train = np.array([row["waveform"] for _, row in train_data.iterrows()])

y_train = np.array(train_data["num_pulses"])

y_train_cls = y_train.astype(int)

X_test = np.array([row["waveform"] for _, row in test_data.iterrows()])

y_test = np.array(test_data["num_pulses"])

y_test_cls = y_test.astype(int)

Train a classifier using these sets and plot the confusion matrix.

The results does not look great, probably becuase the waveform it too confusing for the classifier. Therefore, we can extract specific features to help it filter out all the unnecessary informaiton.

def extract_features(waveform):

slope = np.diff(waveform)

pos_slope = slope[slope > 0]

neg_slope = slope[slope < 0]

features = {

"mean_val": np.mean(waveform),

"std_val": np.std(waveform),

"max_val": np.max(waveform),

"mean_pos_slope": np.mean(pos_slope) if len(pos_slope) > 0 else 0,

"std_pos_slope": np.std(pos_slope) if len(pos_slope) > 0 else 0,

"max_pos_slope": np.max(pos_slope) if len(pos_slope) > 0 else 0,

"mean_neg_slope": np.mean(neg_slope) if len(neg_slope) > 0 else 0,

"std_neg_slope": np.std(neg_slope) if len(neg_slope) > 0 else 0,

"max_neg_slope": np.min(neg_slope) if len(neg_slope) > 0 else 0, # most negative

}

return features

features_list = []

labels = []

for waveform, label in zip(X_train, y_train_cls):

feats = extract_features(waveform)

feats["num_pulses"] = label

features_list.append(feats)

df_features = pd.DataFrame(features_list)

We can generate the pairplot. Which feature do you think will be the most useful?

import seaborn as sns

import matplotlib.pyplot as plt

sns.set(style='whitegrid', context='notebook')

sns.pairplot(df_features, hue='num_pulses', corner=True, plot_kws={'alpha': 0.5})

plt.suptitle("Waveform Feature Clustering by Pulse Count", y=1.02)

plt.show()

Now, let’s refine the model to determine the number of pulses. Define the classifier model as clf:

from sklearn.metrics import classification_report, confusion_matrix, ConfusionMatrixDisplay, f1_score, roc_auc_score, roc_curve, auc

from sklearn.preprocessing import label_binarize

features_list = []

labels = []

for waveform, label in zip(X_test, y_test_cls):

feats = extract_features(waveform)

feats["num_pulses"] = label

features_list.append(feats)

df_test_features = pd.DataFrame(features_list)

X_test_feats = df_test_features.drop(columns='num_pulses')

y_test_feats = df_test_features['num_pulses']

y_pred = clf.predict(X_test_feats)

print(classification_report(y_test_feats, y_pred))

ConfusionMatrixDisplay(confusion_matrix(y_test, y_pred)).plot()

plt.title("confusion Matrix")

plt.show()

f1_scores = f1_score(y_test_feats, y_pred, average=None)

classes = np.unique(y_test_feats)

plt.figure()

plt.bar(classes, f1_scores)

plt.xlabel('Class (num_pulses)')

plt.ylabel('F1 Score')

plt.title('F1 Score per Class')

plt.xticks(classes)

plt.grid(True)

plt.show()

y_score = clf.predict_proba(X_test_feats)

y_test_bin = label_binarize(y_test_feats, classes=classes)

plt.figure()

for i, cls in enumerate(classes):

fpr, tpr, _ = roc_curve(y_test_bin[:, i], y_score[:, i])

roc_auc = auc(fpr, tpr)

plt.plot(fpr, tpr, label=f'Class {cls} (AUC = {roc_auc:.2f})')

plt.plot([0, 1], [0, 1], 'k--')

plt.xlabel("False Positive Rate")

plt.ylabel("True Positive Rate")

plt.title("ROC AUC per Class")

plt.legend(loc="lower right")

plt.grid(True)

plt.show()

This is a lot better, except it doesn’t predict the energy height. Let’s move on to the last part:

Question 6 - Advanced approach#

Advanced ML: Train a model to predict the energies of piled up trapeziods and plot a new spectrum of this data. Compare with the actual spectrum.

References#

[1] Jordanov, Valentin T., and Glenn F. Knoll. “Digital synthesis of pulse shapes in real time for high resolution radiation spectroscopy.” Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, vol. 345, no. 2, June 1994, pp. 337–345, https://doi.org/10.1016/0168-9002(94)91011-1.

[2] A. T. Yue et al 2018 Metrologia 55 460, DOI 10.1088/1681-7575/aac283

[3] A. T. Yue et al. Phys. Rev. Lett. 111, 222501 DOI: https://doi.org/10.1103/PhysRevLett.111.222501

[4] F. M. Gonzales et al. Phys. Rev. Lett. 127, 162501 DOI: https://doi.org/10.1103/PhysRevLett.127.162501

Acknowledgments#

Initial version: Initial version: Calvin Nettelhorst and Ryan Huang with some guidance from Mark Neubauer

© Copyright 2025